An Introduction to the FFmpeg Source Code

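The FFmpeg source code contains many function-pointer hook points, much like the Linux kernel. Since FFmpeg is also written in C, this design makes it easy to plug in new types (protocols, formats, codecs), a C-style form of generics.

It also makes the code structure clearer and easier to read.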
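To make the hook idea concrete, here is a minimal sketch of the pattern: a struct of function pointers acts as the interface, and each new type supplies its own instance. The names (Proto, file_open, mem_open, proto_open) are hypothetical and only loosely modeled on FFmpeg's URLProtocol; they are not FFmpeg's API.

```c
#include <stddef.h>

/* Hypothetical "interface": every implementation fills in the same hooks. */
typedef struct Proto {
    const char *name;
    int (*open)(const char *path);   /* hook: returns 0 on success, -1 on error */
} Proto;

/* Two hypothetical implementations plugged into the same interface. */
static int file_open(const char *path) { return path[0] == '/' ? 0 : -1; }
static int mem_open(const char *path)  { (void)path; return 0; }

static const Proto file_proto = { "file", file_open };
static const Proto mem_proto  = { "mem",  mem_open  };

/* Generic caller: it only knows the interface, never the concrete type. */
static int proto_open(const Proto *p, const char *path)
{
    if (p == NULL || p->open == NULL)
        return -1;
    return p->open(path);
}
```

Adding a new type means defining one more `Proto` instance; `proto_open` itself never changes, which is the property the hook design is after.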

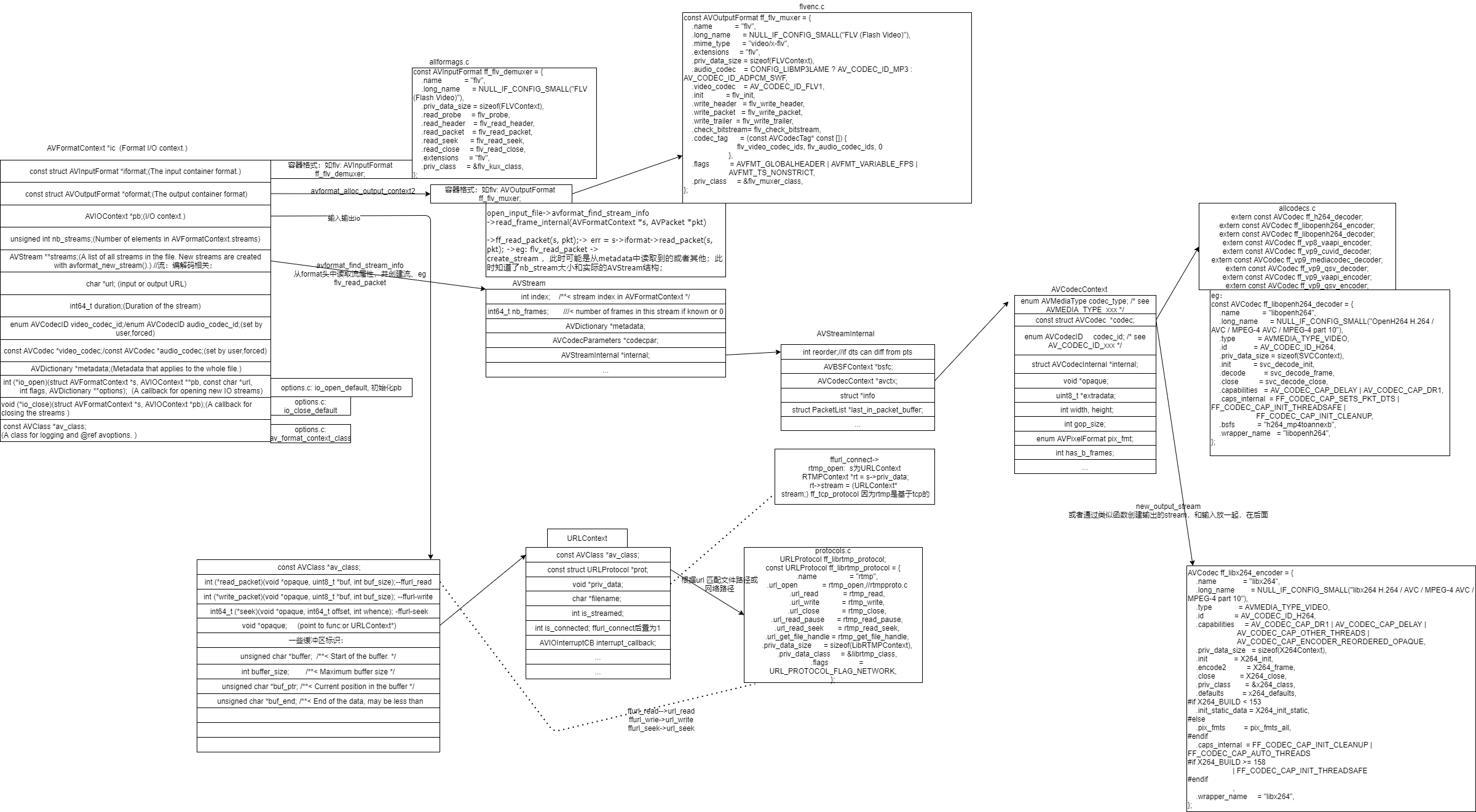

FFmpeg source code structure diagram

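Analysis of ffmpeg.c:

To understand FFmpeg's overall architecture, let's first look at how the ffmpeg command-line tool calls into the FFmpeg libraries.

Overview: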

ffmpeg.c:

`int main(int argc, char **argv)`

Parse the arguments and initialize the inputs and outputs:

`ffmpeg_parse_options(argc, argv); // parse the command-line arguments, including input and output (key function 1)`

Analysis:

`int ffmpeg_parse_options(int argc, char **argv)`

Initializing the input file:

`static int open_input_file(OptionsContext *o, const char *filename)`

Initializing the output:

`// Output:`

Analyzing the main loop:

`if (transcode() < 0) // read the received media data and process it; this is the main loop, essentially the only one (key function 2)`
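The control flow of `transcode()` boils down to a loop that keeps processing until the input is exhausted, an error occurs, or the user asks to stop. The sketch below only illustrates that shape; `step()`, `transcode_sketch()`, `SKETCH_EOF` and `stop_requested` are hypothetical stand-ins, not the functions and flags in ffmpeg.c.

```c
#define SKETCH_EOF (-1)                   /* hypothetical end-of-input code */

static volatile int stop_requested = 0;   /* a real tool would set this from a signal handler */

/* Hypothetical step: handles one packet ("read, decode, encode, write"),
 * or reports EOF when nothing is left. */
static int step(int *remaining)
{
    if (*remaining == 0)
        return SKETCH_EOF;
    (*remaining)--;
    return 0;
}

/* Main-loop sketch: run steps until EOF, an error, or a stop request. */
static int transcode_sketch(int packets, int *processed)
{
    int remaining = packets;

    *processed = 0;
    while (!stop_requested) {
        int ret = step(&remaining);
        if (ret == SKETCH_EOF)
            break;                        /* normal end of input */
        if (ret < 0)
            return ret;                   /* propagate real errors */
        (*processed)++;
    }
    return 0;
}
```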

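Analyzing how the input URL (I/O) layer is built:

Corresponding structure: AVIOContext *s. Important data structures:

Taking RTMP input as an example:

FFmpeg can be used to push and pull streams, but mainly as a client. The filename passed in carries the "rtmp" scheme.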

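From the analysis above we can see that:

During open_input_file, io_open_default is called.

It then looks up a URLProtocol instance and attaches it. How exactly does that work?

When FFmpeg is configured for a build, the configure script generates a file, libavformat/protocol_list.c, which contains an array of URLProtocol pointers: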

For example:

`static const URLProtocol * const url_protocols[] = {`
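The lookup over this NULL-terminated array can be pictured as follows: take the URL scheme (the text before the first ':'), default to "file" when there is none, and compare it with each protocol's name. This is a simplified sketch loosely modeled on url_find_protocol in libavformat/avio.c; the real function also validates scheme characters and handles special cases such as DOS paths. Proto, protocols and find_protocol are hypothetical names.

```c
#include <stddef.h>
#include <string.h>

typedef struct Proto { const char *name; } Proto;

static const Proto file_p = { "file" };
static const Proto rtmp_p = { "rtmp" };
static const Proto http_p = { "http" };

/* NULL-terminated table, in the spirit of the generated protocol_list.c. */
static const Proto *const protocols[] = { &file_p, &rtmp_p, &http_p, NULL };

/* Return the protocol whose name equals the URL scheme; a URL without a
 * scheme (no ':') falls back to "file". Returns NULL if nothing matches. */
static const Proto *find_protocol(const char *url)
{
    char scheme[32] = "file";
    const char *colon = strchr(url, ':');
    size_t len = colon ? (size_t)(colon - url) : 0;

    if (len >= sizeof(scheme))
        return NULL;                      /* scheme too long for this sketch */
    if (len > 0) {
        memcpy(scheme, url, len);
        scheme[len] = '\0';
    }
    for (size_t i = 0; protocols[i] != NULL; i++)
        if (strcmp(protocols[i]->name, scheme) == 0)
            return protocols[i];
    return NULL;
}
```

For instance, `find_protocol("rtmp://localhost/live/x")` selects the rtmp entry, while a plain file name such as `source.flv` falls back to the file entry.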

Let's see how ff_rtmp_protocol is defined in rtmpproto.c:

`#define RTMP_PROTOCOL_0(flavor)`
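The `RTMP_PROTOCOL_0(flavor)` line suggests a macro family that defines one protocol instance per RTMP flavor and expands to nothing when a flavor is disabled. The sketch below reproduces that token-pasting technique in isolation; Proto, DEMO_PROTOCOL* and the CONFIG_DEMO_* switches are hypothetical names, not the real definitions in rtmpproto.c.

```c
/* Hypothetical protocol descriptor. */
typedef struct Proto {
    const char *name;
} Proto;

/* Build switches: 1 = compiled in, 0 = left out (hypothetical flags). */
#define CONFIG_DEMO_RTMP  1
#define CONFIG_DEMO_RTMPS 1
#define CONFIG_DEMO_RTMPT 0

/* Disabled flavor: expands to nothing. */
#define DEMO_PROTOCOL_0(flavor)
/* Enabled flavor: defines demo_<flavor>_protocol named after the flavor. */
#define DEMO_PROTOCOL_1(flavor) \
    const Proto demo_##flavor##_protocol = { #flavor };
/* Two levels of indirection so CONFIG_DEMO_<X> expands to 0/1 before pasting. */
#define DEMO_PROTOCOL_2(flavor, enabled) DEMO_PROTOCOL_##enabled(flavor)
#define DEMO_PROTOCOL_3(flavor, enabled) DEMO_PROTOCOL_2(flavor, enabled)
#define DEMO_PROTOCOL(flavor, upper) \
    DEMO_PROTOCOL_3(flavor, CONFIG_DEMO_##upper)

DEMO_PROTOCOL(rtmp,  RTMP)    /* defines demo_rtmp_protocol  */
DEMO_PROTOCOL(rtmps, RTMPS)   /* defines demo_rtmps_protocol */
DEMO_PROTOCOL(rtmpt, RTMPT)   /* disabled: defines nothing   */
```

The extra `_3` layer matters: an argument placed next to `##` is not macro-expanded, so without it the paste would produce `DEMO_PROTOCOL_CONFIG_DEMO_RTMP` instead of `DEMO_PROTOCOL_1`.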

Tracing how the URLProtocol instance is found:

`io_open_default->`
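Once a protocol is found, it is "attached" by storing a pointer to it in a per-connection context, allocating the protocol's private state, and calling its open hook. The sketch below loosely mirrors the fact that FFmpeg's URLContext holds a `prot` pointer and `priv_data` sized by the protocol's `priv_data_size`; Ctx, ctx_open, ctx_close and the demo protocol are hypothetical names, not FFmpeg functions.

```c
#include <stdlib.h>

typedef struct Ctx Ctx;

/* Hypothetical protocol descriptor with an open hook. */
typedef struct Proto {
    const char *name;
    size_t priv_data_size;                 /* size of per-connection state */
    int (*url_open)(Ctx *h, const char *url);
} Proto;

struct Ctx {
    const Proto *prot;                     /* which implementation is attached */
    void *priv_data;                       /* implementation-private state */
};

/* Private state of a hypothetical "demo" protocol. */
typedef struct DemoState { int opened; } DemoState;

static int demo_open(Ctx *h, const char *url)
{
    DemoState *s = h->priv_data;
    (void)url;
    s->opened = 1;
    return 0;
}

static const Proto demo_proto = { "demo", sizeof(DemoState), demo_open };

/* Allocate a context for protocol p, then "connect" through its hook. */
static int ctx_open(Ctx **out, const Proto *p, const char *url)
{
    Ctx *h = calloc(1, sizeof(*h));
    if (!h)
        return -1;
    h->prot = p;
    if (p->priv_data_size) {
        h->priv_data = calloc(1, p->priv_data_size);
        if (!h->priv_data) {
            free(h);
            return -1;
        }
    }
    int ret = p->url_open(h, url);         /* dispatch to the concrete protocol */
    if (ret < 0) {
        free(h->priv_data);
        free(h);
        return ret;
    }
    *out = h;
    return 0;
}

static void ctx_close(Ctx **h)
{
    if (*h) {
        free((*h)->priv_data);
        free(*h);
        *h = NULL;
    }
}
```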

Backtrace (bt) from an actual run:

Scenario: pushing a stream to a URL, i.e. the output case:

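Analyzing the framework that reads a file and parses its container format:

This corresponds to avformat_open_input, specifically the sub-steps under the init_input call mentioned above.

The process of reading the file content, parsing the FLV (or other) container, and producing AVPackets:

Corresponding structure: AVInputFormat

Related question: how is the container format guessed? Mainly by probing the data, with the file extension as a fallback. allformats.c declares the muxer and demuxer structures for every supported container format: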

`extern const AVOutputFormat ff_flac_muxer;`

The demuxer_list array is likewise generated by configure, as libavformat/demuxer_list.c:

`static const AVInputFormat * const demuxer_list[] = {`

Digging deeper into the related logic:

`const AVInputFormat *av_probe_input_format2(const AVProbeData *pd,`
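The idea behind probing is scoring: every demuxer is asked how well the data matches, demuxers without a probe function can still match on the file extension, and the highest score above a threshold wins. Below is a simplified sketch of that idea; the values 100 and 50 follow FFmpeg's AVPROBE_SCORE_MAX and AVPROBE_SCORE_EXTENSION, but Demuxer, probe_format and the single-extension field are hypothetical simplifications (the real av_probe_input_format2 also deals with ID3 tags, MIME types and extension lists).

```c
#include <stddef.h>
#include <string.h>

#define SCORE_MAX       100   /* certain match */
#define SCORE_EXTENSION  50   /* matched on file extension only */

typedef struct Demuxer {
    const char *name;
    const char *extension;                         /* one extension, simplified */
    int (*probe)(const unsigned char *buf, size_t size);
} Demuxer;

/* Simplified FLV check: data starts with the signature bytes "FLV". */
static int flv_probe(const unsigned char *buf, size_t size)
{
    if (size >= 3 && buf[0] == 'F' && buf[1] == 'L' && buf[2] == 'V')
        return SCORE_MAX;
    return 0;
}

static const Demuxer flv_demuxer = { "flv", "flv", flv_probe };
static const Demuxer raw_demuxer = { "rawdemo", "raw", NULL };  /* no probe hook */

static const Demuxer *const demuxers[] = { &flv_demuxer, &raw_demuxer, NULL };

/* Return the demuxer with the highest score strictly above min_score,
 * or NULL; the winning score is stored in *best_score. */
static const Demuxer *probe_format(const char *filename,
                                   const unsigned char *buf, size_t size,
                                   int min_score, int *best_score)
{
    const Demuxer *best = NULL;
    const char *dot = filename ? strrchr(filename, '.') : NULL;

    *best_score = min_score;
    for (size_t i = 0; demuxers[i] != NULL; i++) {
        const Demuxer *d = demuxers[i];
        int score = 0;
        if (d->probe)
            score = d->probe(buf, size);           /* content-based guess */
        else if (dot && strcmp(dot + 1, d->extension) == 0)
            score = SCORE_EXTENSION;               /* extension fallback */
        if (score > *best_score) {
            *best_score = score;
            best = d;
        }
    }
    return best;
}
```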

Finding the decoder:

This corresponds to avformat_find_stream_info, a sub-step of open_input_file.

Key structure: AVCodec

allcodecs.c

`extern const AVCodec ff_h264_amf_encoder;`

The codec_list array, generated by configure as libavcodec/codec_list.c:

`static const AVCodec * const codec_list[] = {`

Key logic:

`codec = find_probe_decoder(ic, st, st->codecpar->codec_id);`
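Finding a decoder by codec ID is a linear scan over the registry: skip encoders and non-matching IDs, return the first ordinary decoder, and fall back to an experimental one only if nothing else matches. This sketch loosely follows the logic of find_codec in libavcodec/allcodecs.c; the enum values, the Codec struct and the codec names are hypothetical.

```c
#include <stddef.h>

enum CodecID { CODEC_ID_NONE, CODEC_ID_H264, CODEC_ID_AAC };   /* hypothetical IDs */

typedef struct Codec {
    const char *name;
    enum CodecID id;
    int is_decoder;       /* 1 = decoder, 0 = encoder */
    int experimental;     /* 1 = use only if nothing else matches */
} Codec;

static const Codec h264_enc = { "h264_enc_demo", CODEC_ID_H264, 0, 0 };
static const Codec h264_exp = { "h264_exp_demo", CODEC_ID_H264, 1, 1 };
static const Codec h264_dec = { "h264_demo",     CODEC_ID_H264, 1, 0 };
static const Codec aac_dec  = { "aac_demo",      CODEC_ID_AAC,  1, 0 };

/* NULL-terminated registry, in the spirit of the generated codec_list.c. */
static const Codec *const codecs[] = { &h264_enc, &h264_exp, &h264_dec, &aac_dec, NULL };

/* Return the first non-experimental decoder for id, else the first
 * experimental one, else NULL. */
static const Codec *find_decoder(enum CodecID id)
{
    const Codec *experimental = NULL;

    for (size_t i = 0; codecs[i] != NULL; i++) {
        const Codec *c = codecs[i];
        if (c->id != id || !c->is_decoder)
            continue;
        if (c->experimental) {
            if (!experimental)
                experimental = c;   /* remember, keep looking */
            continue;
        }
        return c;
    }
    return experimental;
}
```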

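Some structures explained:

AVStream is the stream structure, with one instance per stream. It contains an AVStreamInternal, which in turn holds an AVCodecContext,

i.e. the encoding- and decoding-related structures.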

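An example scenario:

Take an RTMP or other network stream (a local file in the command below) as input, transcode or remux it, and push the result to a network server. With `-c copy` the streams are copied without re-encoding, so this particular command only remuxes; `-re` reads the input at its native frame rate.

`ffmpeg -re -i source.flv -c copy -f flv -y rtmp://localhost:1936/live/livestream`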

More on muxing:

Muxing and headers: mux.c

`int avformat_write_header(AVFormatContext *s, AVDictionary **options)`

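How is the header of an audio format written? It is handled by the AVOutputFormat member (oformat) of AVFormatContext:

after the output file name or format is identified, the matching predefined AVOutputFormat is looked up,

for example in adtsenc.c:

`const AVOutputFormat ff_adts_muxer = {`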
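As a concrete example of the "header" work a muxer does: in ADTS, every raw AAC frame is preceded by a 7-byte header carrying the sync word, profile, sample-rate index, channel configuration and frame length. The function below is an independent sketch of that header layout (no CRC), not the code in adtsenc.c; write_adts_header is a hypothetical name.

```c
#include <stddef.h>
#include <stdint.h>

/* Write a 7-byte ADTS header (protection_absent = 1, no CRC).
 * object_type: MPEG-4 audio object type (2 = AAC LC)
 * sr_index:    sampling-frequency index (4 = 44100 Hz)
 * channels:    channel configuration (1..7)
 * payload_len: size of the raw AAC frame that follows the header
 * Returns 0 on success, -1 if the frame is too long for the 13-bit field. */
static int write_adts_header(uint8_t hdr[7], int object_type, int sr_index,
                             int channels, size_t payload_len)
{
    size_t frame_len = payload_len + 7;      /* frame length includes the header */
    const unsigned fullness = 0x7FF;         /* buffer fullness: VBR marker */

    if (frame_len > 0x1FFF)
        return -1;

    hdr[0] = 0xFF;                           /* syncword, high 8 bits */
    hdr[1] = 0xF1;                           /* syncword low 4 bits, MPEG-4, layer 0, no CRC */
    hdr[2] = (uint8_t)(((object_type - 1) << 6) | (sr_index << 2) | (channels >> 2));
    hdr[3] = (uint8_t)(((channels & 3) << 6) | (frame_len >> 11));
    hdr[4] = (uint8_t)((frame_len >> 3) & 0xFF);
    hdr[5] = (uint8_t)(((frame_len & 7) << 5) | (fullness >> 6));
    hdr[6] = (uint8_t)((fullness & 0x3F) << 2);  /* one raw data block per frame */
    return 0;
}
```

For AAC LC at 44100 Hz in stereo, the first bytes come out as the familiar `FF F1 50 80`.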

More formats:

allformats.c:

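Formats such as FLAC can also be found here, both demuxers and muxers. These deal mainly with headers (the container layer) and are independent of encoding and decoding.

More on codecs:

ff_mpeg2video_encoder is the AVCodec structure for the MPEG-2 encoder: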
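Choosing an output format, as described above, combines two clues: an explicit format name (such as `-f flv`, which is required for an rtmp:// URL that has no file extension) and the output file's extension. This sketch is loosely modeled on av_guess_format's scoring; Muxer, guess_muxer and the weights 100 and 5 are assumptions for illustration, chosen so that an explicit name always outweighs an extension match.

```c
#include <stddef.h>
#include <string.h>

typedef struct Muxer {
    const char *name;
    const char *extension;     /* one extension, simplified */
} Muxer;

static const Muxer adts_mux = { "adts", "aac"  };
static const Muxer flac_mux = { "flac", "flac" };
static const Muxer flv_mux  = { "flv",  "flv"  };

static const Muxer *const muxers[] = { &adts_mux, &flac_mux, &flv_mux, NULL };

/* Pick a muxer from an explicit format name and/or the output file name.
 * Returns NULL when neither clue matches anything. */
static const Muxer *guess_muxer(const char *short_name, const char *filename)
{
    const char *dot = filename ? strrchr(filename, '.') : NULL;
    const Muxer *best = NULL;
    int best_score = 0;

    for (size_t i = 0; muxers[i] != NULL; i++) {
        int score = 0;
        if (short_name && strcmp(short_name, muxers[i]->name) == 0)
            score += 100;                     /* illustrative weight: explicit name */
        if (dot && strcmp(dot + 1, muxers[i]->extension) == 0)
            score += 5;                       /* illustrative weight: extension */
        if (score > best_score) {
            best_score = score;
            best = muxers[i];
        }
    }
    return best;
}
```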

`init() -> encode_init()`

mpeg12enc.c:

`const AVCodec ff_mpeg1video_encoder = {`
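The `init() -> encode_init()` mapping is the same hook pattern applied to codecs: a generic layer allocates the codec's private state and then calls the callbacks the codec registered. The sketch below imitates that flow with hypothetical names (Codec, CodecCtx, demo_encoder, codec_open, codec_free); it is not libavcodec's actual avcodec_open2 implementation, and the "encoding" is a placeholder.

```c
#include <stdlib.h>

typedef struct CodecCtx CodecCtx;

/* Hypothetical codec descriptor: hooks called by the generic layer. */
typedef struct Codec {
    const char *name;
    size_t priv_data_size;
    int (*init)(CodecCtx *ctx);
    int (*encode)(CodecCtx *ctx, int frame, int *packet);
    int (*close)(CodecCtx *ctx);
} Codec;

struct CodecCtx {
    const Codec *codec;
    void *priv_data;
};

/* Private state of a hypothetical "demo" encoder. */
typedef struct DemoEnc { int frames_seen; } DemoEnc;

static int demo_init(CodecCtx *ctx)
{
    ((DemoEnc *)ctx->priv_data)->frames_seen = 0;
    return 0;
}

static int demo_encode(CodecCtx *ctx, int frame, int *packet)
{
    DemoEnc *s = ctx->priv_data;
    s->frames_seen++;
    *packet = frame * 2;          /* placeholder for real compression */
    return 0;
}

static int demo_close(CodecCtx *ctx) { (void)ctx; return 0; }

static const Codec demo_encoder = {
    .name           = "demo",
    .priv_data_size = sizeof(DemoEnc),
    .init           = demo_init,
    .encode         = demo_encode,
    .close          = demo_close,
};

/* Generic open: allocate private data, then call the codec's init hook. */
static int codec_open(CodecCtx *ctx, const Codec *codec)
{
    ctx->codec = codec;
    ctx->priv_data = calloc(1, codec->priv_data_size);
    if (!ctx->priv_data)
        return -1;
    if (codec->init && codec->init(ctx) < 0) {
        free(ctx->priv_data);
        ctx->priv_data = NULL;
        return -1;
    }
    return 0;
}

/* Generic close: call the codec's close hook, then free private data. */
static void codec_free(CodecCtx *ctx)
{
    if (ctx->codec && ctx->codec->close)
        ctx->codec->close(ctx);
    free(ctx->priv_data);
    ctx->priv_data = NULL;
}
```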

More: see the source code and the official website.