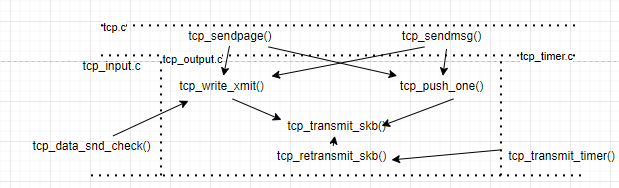

linux tcp数据发送框架

- linux协议栈tcp发送流程:

tcp.c: tcp_sendmsg()函数分析:

1 | 0 处理tcp的一些特性的:fastopen,repair(连接迁移收尾),等 |

- 几个关键概念:

- 链路mtu:mtu是链路以太网包载荷大小,一般和路由器相关,一般是1500,所以除开ip头20B,tcp头20B,tcp payload一般为1460

- tcp的mss:因为app一般不支持分片,所以最好不要超mtu下发,但上层可能超额带下来,这个时候需要tcp seg不要超过一定值,但不是一直都是1460,对隧道或其他技术,会占用一些字节,导致tcp payload<1460,即tcp mss

- tcp mss可以通过三次握手得到:如client: syn–>1460,但server返回的syn ack带的mss是1420如此,client调整自己的mss

https://www.imperva.com/blog/mtu-mss-explained/

注意:tcp mss是放在tcp option内。上层可以通过ioctl等设置

linux内核发送数据和窗口的关系,窗口如何影响数据发送

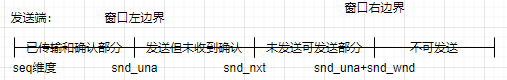

先来看看滑动窗口:

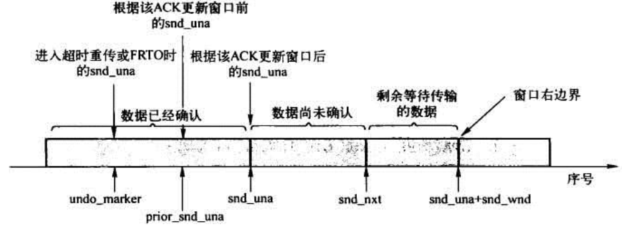

结合代码里一些变量的窗口

可以看到,当snd_una+snd_wnd即窗口>snd_nxt时,可以继续发送数据,否则此时不能继续发。

那snd_wnd的大小怎么确定? 单位是什么?

snd_una是窗口左边界,是一个sequence,表示在输出的段中,最早一个未确认段的序号。一般为窗口的左边界

snd_nxt 等待发送的下一个tcp段的序号。

snd_wnd 接收方提供的接收窗口大小,即发送方发送窗口大小。其值来自ack所带的接收方窗口大小*opt中的窗口扩大因子,算出来也是一个序号值。

snd_cwnd 拥塞窗口大小,是此时可以在空中存在的段数量。

来看看拥塞窗口和发送窗口怎么限制发送:

在一次sendMsg调用中:

1 | 基于5.13.1: |

至此,知道了拥塞窗口和接收窗口如何影响当次sendmsg的数据发送量,有个疑问,如果是依赖上层调用sendMsg来驱动发送,那会有一些包遗留着一直进不去窗口来发送如果

上层之后不再调用sendMsg, 故,这里sendMsg会把包放到发送队列,在收到ack后,会驱动去判断队列是否还有包,有则发送。

详细看这个函数的调用流程:

1 | static inline void tcp_data_snd_check(struct sock *sk) |

接收方窗口更新

简略介绍:

1 | static int tcp_ack(struct sock *sk, const struct sk_buff *skb, int flag) |

拥塞算法和拥塞窗口简介

拥塞算法是通过ack等机制间接了解网络状态后,通过调整拥塞控制窗口,进而调整输出到网络上的流量,来达到尝试减少网络拥塞程度的目的的算法。

linux5.13.1拥塞控制框架

- 拥塞算法注册和初始化

- 模块生成和注册,每个拥塞控制算法都是一个模块的形式,内核进行模块注册和卸载;

例如cubic:

module_init(cubictcp_register);

module_exit(cubictcp_unregister);

bbr:

module_init(bbr_register);

module_exit(bbr_unregister); - 在初始化或程序调用setopt接口后,选择某一种拥塞控制算法,会进而进行初始化:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34static int do_tcp_setsockopt(struct sock *sk, int level, int optname,

sockptr_t optval, unsigned int optlen)

err = tcp_set_congestion_control(sk, name, true,ns_capable(sock_net(sk)->user_ns)

是在setopt调用下来后,将选择的拥塞控制挂载上去,然后调用init函数

tcp_cong.c:

tcp_set_congestion_control->

tcp_reinit_congestion_control->

tcp_init_congestion_control->

icsk->icsk_ca_ops->init(sk);--进而调用到具体拥塞控制算法的初始化函数

如:cubic

static struct tcp_congestion_ops cubictcp __read_mostly = {

.init = cubictcp_init,

.ssthresh = cubictcp_recalc_ssthresh,

.cong_avoid = cubictcp_cong_avoid,

.set_state = cubictcp_state,

.undo_cwnd = tcp_reno_undo_cwnd,

.cwnd_event = cubictcp_cwnd_event,

.pkts_acked = cubictcp_acked,

.owner = THIS_MODULE,

.name = "cubic",

};

static void cubictcp_init(struct sock *sk)

{

struct bictcp *ca = inet_csk_ca(sk);//这个结构放在sock中,通过这样获取到

bictcp_reset(ca);//全部置为0:memset(ca, 0, offsetof(struct bictcp, unused)); ca->found = 0;

if (hystart)//默认为1

bictcp_hystart_reset(sk);

if (!hystart && initial_ssthresh)

tcp_sk(sk)->snd_ssthresh = initial_ssthresh;

}

- 拥塞算法整体流程和驱动

虽然内核支持多种拥塞控制算法,但却受到协议栈调用的诸多限制,导致算法本身不是很灵活,也难以合适的接入,依赖外部流程从多个地方调用拥塞

控制算法模块,拥塞控制状态也是外部控制,耦合性太强,这里的外部是相对于拥塞控制模块而言,即协议栈;

而且拥塞窗口不完全由拥塞控制算法控制,如在丢包状态下,协议栈也会更新拥塞控制窗口。

就驱动拥塞控制算法即,什么时候调用拥塞控制算法相关函数:

- 收到ack,走tcp_ack

- timer 触发重传,进入loss状态处理时;

- 拥塞算法的各个阶段解释:

- 慢启动:对应状态:TCP_CA_Open

- 拥塞避免:对应状态:TCP_CA_Open,当慢启动阶段的拥塞窗口到达慢启动阈值,ssthresh,此时进入拥塞避免阶段,增长速度放缓;

- 超时或异常,和在恢复中的阶段:对应状态:

TCP_CA_Disorder = 1,

TCP_CA_CWR = 2,

TCP_CA_Recovery = 3,

TCP_CA_Loss = 4

这几个阶段的代码解释:这几个状态下拥塞控制窗口基本不增长甚至减小,具体见下分析。但个人感觉这几个状态的处理和之间的转换界限不够清晰,所以我统一归到超时异常阶段的处理。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39/*

* Sender's congestion state indicating normal or abnormal situations

* in the last round of packets sent. The state is driven by the ACK

* information and timer events.

*/

enum tcp_ca_state {

/*

* Nothing bad has been observed recently.

* No apparent reordering, packet loss, or ECN marks.

*/

TCP_CA_Open = 0,

/*

* The sender enters disordered state when it has received DUPACKs or

* SACKs in the last round of packets sent. This could be due to packet

* loss or reordering but needs further information to confirm packets

* have been lost.

*/

TCP_CA_Disorder = 1,

/*

* The sender enters Congestion Window Reduction (CWR) state when it

* has received ACKs with ECN-ECE marks, or has experienced congestion

* or packet discard on the sender host (e.g. qdisc).

*/

TCP_CA_CWR = 2,

/*

* The sender is in fast recovery and retransmitting lost packets,

* typically triggered by ACK events.

*/

TCP_CA_Recovery = 3,

/*

* The sender is in loss recovery triggered by retransmission timeout.

*/

TCP_CA_Loss = 4

};

- 拥塞控制模块提供了哪些可实现控制的接口:

基于5.13.1,英文注释很清晰;1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51struct tcp_congestion_ops {

/* fast path fields are put first to fill one cache line */

/* return slow start threshold (required) */

u32 (*ssthresh)(struct sock *sk);

/* do new cwnd calculation (required) */

void (*cong_avoid)(struct sock *sk, u32 ack, u32 acked);

/* call before changing ca_state (optional) */

void (*set_state)(struct sock *sk, u8 new_state);

/* call when cwnd event occurs (optional) */

void (*cwnd_event)(struct sock *sk, enum tcp_ca_event ev);

/* call when ack arrives (optional) */

void (*in_ack_event)(struct sock *sk, u32 flags);

/* hook for packet ack accounting (optional) */

void (*pkts_acked)(struct sock *sk, const struct ack_sample *sample);

/* override sysctl_tcp_min_tso_segs */

u32 (*min_tso_segs)(struct sock *sk);

/* call when packets are delivered to update cwnd and pacing rate,

* after all the ca_state processing. (optional)

*/

void (*cong_control)(struct sock *sk, const struct rate_sample *rs);

/* new value of cwnd after loss (required) */

u32 (*undo_cwnd)(struct sock *sk);

/* returns the multiplier used in tcp_sndbuf_expand (optional) */

u32 (*sndbuf_expand)(struct sock *sk);

/* control/slow paths put last */

/* get info for inet_diag (optional) */

size_t (*get_info)(struct sock *sk, u32 ext, int *attr,

union tcp_cc_info *info);

char name[TCP_CA_NAME_MAX];

struct module *owner;

struct list_head list;

u32 key;

u32 flags;

/* initialize private data (optional) */

void (*init)(struct sock *sk);

/* cleanup private data (optional) */

void (*release)(struct sock *sk);

} ____cacheline_aligned_in_smp; - 拥塞控制各个阶段代码简要分析:

慢启动:

最开始的时候是TCP_CA_Open,虽然后面的状态也会调用这个接口,但是判断后实际不会去增长拥塞窗口。

由ack驱动:在ack对seq和ack_seq检查以及其他检查通过后,调用

tcp_cong_control(sk, ack, delivered, flag, sack_state.rate);//拥塞控制处理1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39/* The "ultimate" congestion control function that aims to replace the rigid

* cwnd increase and decrease control (tcp_cong_avoid,tcp_*cwnd_reduction).

* It's called toward the end of processing an ACK with precise rate

* information. All transmission or retransmission are delayed afterwards.

*/

static void tcp_cong_control(struct sock *sk, u32 ack, u32 acked_sacked,

int flag, const struct rate_sample *rs)

{

const struct inet_connection_sock *icsk = inet_csk(sk);

if (icsk->icsk_ca_ops->cong_control) {

icsk->icsk_ca_ops->cong_control(sk, rs);//有些拥塞算法实现了这个接口,接管更多控制

return;

}

if (tcp_in_cwnd_reduction(sk)) { //减小拥塞控制窗口

/* Reduce cwnd if state mandates */

tcp_cwnd_reduction(sk, acked_sacked, rs->losses, flag);

} else if (tcp_may_raise_cwnd(sk, flag)) { //增大拥塞控制窗口

/* Advance cwnd if state allows */

tcp_cong_avoid(sk, ack, acked_sacked);//会进一步调用拥塞控制算法

}

tcp_update_pacing_rate(sk);//这个应该bbr用的

}

static inline bool tcp_in_cwnd_reduction(const struct sock *sk)

{

return (TCPF_CA_CWR | TCPF_CA_Recovery) &

(1 << inet_csk(sk)->icsk_ca_state);//(0b100 | 0b110) & (1<<state),即为 0b110 & (1<<state)由上分析只有

(disorder,cwr,recovery)会返回true,open和loss返回false

}

static void tcp_cong_avoid(struct sock *sk, u32 ack, u32 acked)

{

const struct inet_connection_sock *icsk = inet_csk(sk);

icsk->icsk_ca_ops->cong_avoid(sk, ack, acked);//拥塞控制算法模块的慢启动和拥塞避免函数

tcp_sk(sk)->snd_cwnd_stamp = tcp_jiffies32;//记下时间

}拥塞避免:调用的接口和时机同上;

但有个阈值更新的函数,会影响进入拥塞避免的时机:

例如,在进入丢包状态:

void tcp_enter_loss(struct sock *sk)

–>tp->snd_ssthresh = icsk->icsk_ca_ops->ssthresh(sk);//这里会调用拥塞控制模块实现的接口,一般会去更新慢启动阈值。

- 超时或异常,和在恢复中的阶段:对应状态:

a: TCP_CA_Disorder = 1

如何进入:在即将恢复进入TCP_CA_Open状态前,会可能进入Disorder;1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55tcp_try_to_open

or

case TCP_CA_Recovery:

tcp_try_keep_open

static void tcp_try_keep_open(struct sock *sk)

{

struct tcp_sock *tp = tcp_sk(sk);

int state = TCP_CA_Open;

if (tcp_left_out(tp) || tcp_any_retrans_done(sk))

state = TCP_CA_Disorder;

if (inet_csk(sk)->icsk_ca_state != state) {

tcp_set_ca_state(sk, state);

tp->high_seq = tp->snd_nxt;

}

}

在这个状态下处理走default,会尝试进入Open或recovery:

tcp_ack->

static void tcp_fastretrans_alert

/* E. Process state. *///处理拥塞控制的各个状态;

switch (icsk->icsk_ca_state) {

default:

if (tcp_is_reno(tp)) {

if (flag & FLAG_SND_UNA_ADVANCED)

tcp_reset_reno_sack(tp);

tcp_add_reno_sack(sk, num_dupack, ece_ack);

}

if (icsk->icsk_ca_state <= TCP_CA_Disorder)

tcp_try_undo_dsack(sk);

tcp_identify_packet_loss(sk, ack_flag);

if (!tcp_time_to_recover(sk, flag)) {

tcp_try_to_open(sk, flag);

return;

}

/* MTU probe failure: don't reduce cwnd */

if (icsk->icsk_ca_state < TCP_CA_CWR &&

icsk->icsk_mtup.probe_size &&

tp->snd_una == tp->mtu_probe.probe_seq_start) {

tcp_mtup_probe_failed(sk);

/* Restores the reduction we did in tcp_mtup_probe() */

tp->snd_cwnd++;

tcp_simple_retransmit(sk);

return;

}

/* Otherwise enter Recovery state */

tcp_enter_recovery(sk, ece_ack);

fast_rexmit = 1;

}

b TCP_CA_CWR = 2

什么时候进入cwr状态:

发送失败或者收到显示ecn ,进入cwr拥塞控制窗口减少的状态;

- tcp_transmit_skb->若发送失败>0 tcp_enter_cwr();

- tcp_ack->tcp_fastretrans_alert()->tcp_try_to_open当flag带ecn时->tcp_enter_cwr

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32/* Enter CWR state. Disable cwnd undo since congestion is proven with ECN */

void tcp_enter_cwr(struct sock *sk)

{

struct tcp_sock *tp = tcp_sk(sk);

tp->prior_ssthresh = 0;

if (inet_csk(sk)->icsk_ca_state < TCP_CA_CWR) {

tp->undo_marker = 0;

tcp_init_cwnd_reduction(sk);

tcp_set_ca_state(sk, TCP_CA_CWR);

}

}

在这个状态下的处理:

1) 尝试状态终结:

else if (!before(tp->snd_una, tp->high_seq)) {//high_seq是拥塞时的snd_nxt即最后一个发送未确认

//标识重传队列结尾,这个条件时snd_una>tp->hith_seq

switch (icsk->icsk_ca_state) {

case TCP_CA_CWR:

/* CWR is to be held something *above* high_seq

* is ACKed for CWR bit to reach receiver. */

if (tp->snd_una != tp->high_seq) {//都ack了,可以重入open

tcp_end_cwnd_reduction(sk);//重置慢启动阈值等

tcp_set_ca_state(sk, TCP_CA_Open);

}

break;

2) 状态处理:

switch (icsk->icsk_ca_state) {

default:同上个状态的处理

这个状态下对拥塞窗口的处理见tcp_cwnd_reduction

c TCP_CA_Recovery = 3:

如何进入:不考虑rack的话,从其他状态转换而来:

tcp_enter_recovery

1 | tcp_fastretrans_alert-> |

状态处理:

1 | 1) 尝试终结: |

d TCP_CA_Loss = 4

如何进入: 触发重传定时器时:

1 | /* Enter Loss state. */ |

状态处理:

1 | case TCP_CA_Loss: |

PS:linux定时器机制和重传定时器简单分析:

实现主要在timer.c,由时钟软中断触发,检查是否超时,再调用回调;

1 | 创建: |

至此,可以总结为:

在open下做慢启动和拥塞避免,此时拥塞窗口增长;

在disorder/cwr/recovery,统一tcp_cwnd_reduction进行拥塞窗口减量减少;

在loss,会有单独的拥塞窗口更新,见上。

各个状态处理对应事项,并可以重回open正常状态。

而各个拥塞控制算法,控制的是:

1 慢启动、拥塞避免的增长逻辑

2 实现了cong_control接口的,可以控制拥塞窗口减小逻辑;

3 慢启动阈值更新逻辑;

对拥塞控制状态转换和处理则无法控制。

就着上面的分析,看看具体的拥塞控制算法;

cubic拥塞控制算法

cubic相比于之前的拥塞控制算法,主要是通过更激进的拥塞窗口增长逻辑来提高拥塞下的网络利用率;

网上有很多文章翻译了rfc,例如https://www.jianshu.com/p/d4db63e2b519

这里不再冗余;

直接看对应代码; tcp_cubic.c:

cubic实现的接口:

1

2

3

4

5

6

7

8

9

10

11static struct tcp_congestion_ops cubictcp __read_mostly = {

.init = cubictcp_init,//初始化

.ssthresh = cubictcp_recalc_ssthresh,//慢启动阈值更新

.cong_avoid = cubictcp_cong_avoid, //慢启动和拥塞避免窗口更新

.set_state = cubictcp_state,//状态转换的调用函数

.undo_cwnd = tcp_reno_undo_cwnd,// new value of cwnd after loss (required)

.cwnd_event = cubictcp_cwnd_event,//cwnd 事件发生处理函数

.pkts_acked = cubictcp_acked,//packet ack accounting

.owner = THIS_MODULE,

.name = "cubic",

};初始化:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22static void cubictcp_init(struct sock *sk)

{

struct bictcp *ca = inet_csk_ca(sk);//这个结构放在sock中,通过这样获取到

bictcp_reset(ca);//全部置为0:memset(ca, 0, offsetof(struct bictcp, unused)); ca->found = 0;

if (hystart)//默认为1

bictcp_hystart_reset(sk);

if (!hystart && initial_ssthresh)

tcp_sk(sk)->snd_ssthresh = initial_ssthresh;

}

static inline void bictcp_hystart_reset(struct sock *sk)

{

struct tcp_sock *tp = tcp_sk(sk);

struct bictcp *ca = inet_csk_ca(sk);

ca->round_start = ca->last_ack = bictcp_clock_us(sk);//tcp_sk(sk)->tcp_mstamp;//最近接收或发送的包的时间戳,用于计算rtt

ca->end_seq = tp->snd_nxt;//下一个我们发送的seqnum

ca->curr_rtt = ~0U;

ca->sample_cnt = 0;

}慢启动和拥塞避免

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

205

206

207每次收到ack,调用tcp_ack会进而调用这个,来更新拥塞窗口;

static void cubictcp_cong_avoid(struct sock *sk, u32 ack, u32 acked)//acked为当前的ack包acked或sacked了多少个包。由于延迟确认机制,一个ack可以acked多个包

{

struct tcp_sock *tp = tcp_sk(sk);

struct bictcp *ca = inet_csk_ca(sk);

if (!tcp_is_cwnd_limited(sk))//当发送速率比较慢,即当前拥塞窗口足以应对窗口滑动和包发送速率,此时没必要增大拥塞窗口;

return;

if (tcp_in_slow_start(tp)) {

if (hystart && after(ack, ca->end_seq))//ack在拥塞记录的end_seq后了,重置拥塞的相关参数;

bictcp_hystart_reset(sk);

acked = tcp_slow_start(tp, acked);

if (!acked) //没到慢启动阈值,返回

return;

}

bictcp_update(ca, tp->snd_cwnd, acked);//拥塞避免

tcp_cong_avoid_ai(tp, ca->cnt, acked);

}

/* We follow the spirit本意本质 of RFC2861 to validate cwnd but implement a more

* flexible approach. The RFC suggests cwnd should not be raised unless

* it was fully used previously. 用满了才增加窗口,And that's exactly what we do in

* congestion avoidance mode. But in slow start we allow cwnd to grow

* as long as the application has used half the cwnd.//但在慢启动下,我们允许在用了窗口一半时就增长

* Example :

* cwnd is 10 (IW10), but application sends 9 frames.

* We allow cwnd to reach 18 when all frames are ACKed.

* This check is safe because it's as aggressive as slow start which already

* risks 100% overshoot. The advantage is that we discourage application to

* either send more filler packets or data to artificially blow up the cwnd

* usage, and allow application-limited process to probe bw more aggressively.

*/

static inline bool tcp_is_cwnd_limited(const struct sock *sk)

{

const struct tcp_sock *tp = tcp_sk(sk);

/* If in slow start, ensure cwnd grows to twice what was ACKed. */

if (tcp_in_slow_start(tp))//如何判断在慢启动:tp->snd_cwnd < tp->snd_ssthresh; 拥塞窗口小于阈值

return tp->snd_cwnd < 2 * tp->max_packets_out;//当窗口小于max_packets_out的两倍时,不做增长;

//max_packets_out:在最后一个窗口中发送的最大包数。

return tp->is_cwnd_limited;

}

tcp_cong.c

/* Slow start is used when congestion window is no greater than the slow start

* threshold. We base on RFC2581 and also handle stretch ACKs properly.

* We do not implement RFC3465 Appropriate Byte Counting (ABC) per se but

* something better;) a packet is only considered (s)acked in its entirety to

* defend the ACK attacks described in the RFC. Slow start processes a stretch

* ACK of degree N as if N acks of degree 1 are received back to back except

* ABC caps N to 2. Slow start exits when cwnd grows over ssthresh and

* returns the leftover acks to adjust cwnd in congestion avoidance mode.

*/

//慢启动被用于当拥塞窗口不大于慢启动阈值时,我们基于2581 也处理,即cubic并未改变慢启动逻辑

u32 tcp_slow_start(struct tcp_sock *tp, u32 acked)

{

u32 cwnd = min(tp->snd_cwnd + acked, tp->snd_ssthresh);//取拥塞窗口+acked,拥塞阈值的最小值作为cwnd

acked -= cwnd - tp->snd_cwnd;//一般来讲是0,否则当选择阈值时,则>0,即此时已经到阈值了

tp->snd_cwnd = min(cwnd, tp->snd_cwnd_clamp);//u32 snd_cwnd_clamp; /* Do not allow snd_cwnd to grow above this */

return acked;

}

//对cubic来说,进入拥塞避免阶段,会采用另一种方式去计算没收到一个acked应该增加多少大小窗口

//有三种情况:凹区域,凸区域,tcp友好区域;具体:https://www.jianshu.com/p/d4db63e2b519 ,以下函数就是为了计算每收到一个ACK,cwnd 必须增加的数量,

//通过识别是哪种情况,来增加;

// ca->cnt即是要求。

/*

* Compute congestion window to use.

*/

static inline void bictcp_update(struct bictcp *ca, u32 cwnd, u32 acked)

{

u32 delta, bic_target, max_cnt;

u64 offs, t;

ca->ack_cnt += acked; /* count the number of ACKed packets */

if (ca->last_cwnd == cwnd &&

(s32)(tcp_jiffies32 - ca->last_time) <= HZ / 32)

return;

/* The CUBIC function can update ca->cnt at most once per jiffy.

* On all cwnd reduction events, ca->epoch_start is set to 0,

* which will force a recalculation of ca->cnt.

*/

if (ca->epoch_start && tcp_jiffies32 == ca->last_time)

goto tcp_friendliness;

ca->last_cwnd = cwnd;

ca->last_time = tcp_jiffies32;

if (ca->epoch_start == 0) {

ca->epoch_start = tcp_jiffies32; /* record beginning */

ca->ack_cnt = acked; /* start counting */

ca->tcp_cwnd = cwnd; /* syn with cubic */

if (ca->last_max_cwnd <= cwnd) {

ca->bic_K = 0;

ca->bic_origin_point = cwnd;

} else {

/* Compute new K based on

* (wmax-cwnd) * (srtt>>3 / HZ) / c * 2^(3*bictcp_HZ)

*/

ca->bic_K = cubic_root(cube_factor

* (ca->last_max_cwnd - cwnd));

ca->bic_origin_point = ca->last_max_cwnd;

}

}

/* cubic function - calc*/

/* calculate c * time^3 / rtt,

* while considering overflow in calculation of time^3

* (so time^3 is done by using 64 bit)

* and without the support of division of 64bit numbers

* (so all divisions are done by using 32 bit)

* also NOTE the unit of those veriables

* time = (t - K) / 2^bictcp_HZ

* c = bic_scale >> 10

* rtt = (srtt >> 3) / HZ

* !!! The following code does not have overflow problems,

* if the cwnd < 1 million packets !!!

*/

t = (s32)(tcp_jiffies32 - ca->epoch_start);

t += usecs_to_jiffies(ca->delay_min);

/* change the unit from HZ to bictcp_HZ */

t <<= BICTCP_HZ;

do_div(t, HZ);

if (t < ca->bic_K) /* t - K */

offs = ca->bic_K - t;

else

offs = t - ca->bic_K;

/* c/rtt * (t-K)^3 */

delta = (cube_rtt_scale * offs * offs * offs) >> (10+3*BICTCP_HZ);

if (t < ca->bic_K) /* below origin*/

bic_target = ca->bic_origin_point - delta;

else /* above origin*/

bic_target = ca->bic_origin_point + delta;

/* cubic function - calc bictcp_cnt*/

if (bic_target > cwnd) {

ca->cnt = cwnd / (bic_target - cwnd);

} else {

ca->cnt = 100 * cwnd; /* very small increment*/

}

/*

* The initial growth of cubic function may be too conservative

* when the available bandwidth is still unknown.

*/

if (ca->last_max_cwnd == 0 && ca->cnt > 20)

ca->cnt = 20; /* increase cwnd 5% per RTT */

tcp_friendliness:

/* TCP Friendly */

if (tcp_friendliness) {

u32 scale = beta_scale;

delta = (cwnd * scale) >> 3;

while (ca->ack_cnt > delta) { /* update tcp cwnd */

ca->ack_cnt -= delta;

ca->tcp_cwnd++;

}

if (ca->tcp_cwnd > cwnd) { /* if bic is slower than tcp */

delta = ca->tcp_cwnd - cwnd;

max_cnt = cwnd / delta;

if (ca->cnt > max_cnt)

ca->cnt = max_cnt;

}

}

/* The maximum rate of cwnd increase CUBIC allows is 1 packet per

* 2 packets ACKed, meaning cwnd grows at 1.5x per RTT.

*/

ca->cnt = max(ca->cnt, 2U);

}

//拥塞避免阶段也是要增加 cwnd,只是增加的速度问题,速度由上个函数update,这个函数用来更新cwnd,看起来加的很小。

/* In theory this is tp->snd_cwnd += 1 / tp->snd_cwnd (or alternative w),

* for every packet that was ACKed.

*/

void tcp_cong_avoid_ai(struct tcp_sock *tp, u32 w, u32 acked)

{

/* If credits accumulated at a higher w, apply them gently now. */

if (tp->snd_cwnd_cnt >= w) {

tp->snd_cwnd_cnt = 0;

tp->snd_cwnd++;

}

tp->snd_cwnd_cnt += acked;

if (tp->snd_cwnd_cnt >= w) {

u32 delta = tp->snd_cwnd_cnt / w;

tp->snd_cwnd_cnt -= delta * w;

tp->snd_cwnd += delta;

}

tp->snd_cwnd = min(tp->snd_cwnd, tp->snd_cwnd_clamp);

}窗口减小,依然使用tcp_input.c实现,即外部函数:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25void tcp_cwnd_reduction(struct sock *sk, int newly_acked_sacked, int newly_lost, int flag)

{

struct tcp_sock *tp = tcp_sk(sk);

int sndcnt = 0;

int delta = tp->snd_ssthresh - tcp_packets_in_flight(tp);

if (newly_acked_sacked <= 0 || WARN_ON_ONCE(!tp->prior_cwnd))

return;

tp->prr_delivered += newly_acked_sacked;

if (delta < 0) {

u64 dividend = (u64)tp->snd_ssthresh * tp->prr_delivered +

tp->prior_cwnd - 1;

sndcnt = div_u64(dividend, tp->prior_cwnd) - tp->prr_out;

} else if (flag & FLAG_SND_UNA_ADVANCED && !newly_lost) {

sndcnt = min_t(int, delta,

max_t(int, tp->prr_delivered - tp->prr_out,

newly_acked_sacked) + 1);

} else {

sndcnt = min(delta, newly_acked_sacked);

}

/* Force a fast retransmit upon entering fast recovery */

sndcnt = max(sndcnt, (tp->prr_out ? 0 : 1));

tp->snd_cwnd = tcp_packets_in_flight(tp) + sndcnt;

}丢包窗口限制

同上,见tcp_enter_loss慢启动阈值更新;

在进入cwr/loss状态,会调用拥塞控制的ssthresh函数,进行慢启动阈值更新;

cubic:1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31//做了两件事:重赋值last_max_cwnd、返回新的慢启动阈值

static u32 bictcp_recalc_ssthresh(struct sock *sk)

{//论文说这个函数在Packet loss时调用

const struct tcp_sock *tp = tcp_sk(sk);

struct bictcp *ca = inet_csk_ca(sk);

ca->epoch_start = 0; /* 发生拥塞状态切换,标志一个epoch结束 end of epoch */

/* Wmax and fast convergence */

//当一个新的TCP流加入到网络,

//网络中已有TCP流需要放弃自己带宽,

//给新的TCP流提供一定的上升空间。

//为提高已有TCP流所释放的带宽而引入快速收敛机制。

if (tp->snd_cwnd < ca->last_max_cwnd && fast_convergence)

//snd_cwnd<last_max_cwnd

//表示已有TCP流所经历的饱和点因为可用带宽改变而正在降低。

//然后,通过进一步降低Wmax让已有流释放更多带宽。

//这种行为有效地延长已有流增大其窗口的时间,

//因为降低后的Wmax强制已有流更早进入平稳状态。

//这允许新流有更多的时间来赶上其窗口尺寸。

ca->last_max_cwnd = (tp->snd_cwnd * (BICTCP_BETA_SCALE + beta))

/ (2 * BICTCP_BETA_SCALE); //last_max_cwnd = 0.9 * snd_cwnd

else

ca->last_max_cwnd = tp->snd_cwnd;

ca->loss_cwnd = tp->snd_cwnd;

//修改snd_ssthresh,即max(0.7*snd_cwnd,2)

return max((tp->snd_cwnd * beta) / BICTCP_BETA_SCALE, 2U);

}

bbr拥塞控制算法:

bbr实现了哪些函数:

1

2

3

4

5

6

7

8

9

10

11

12

13

14static struct tcp_congestion_ops tcp_bbr_cong_ops __read_mostly = {

.flags = TCP_CONG_NON_RESTRICTED,

.name = "bbr",

.owner = THIS_MODULE,

.init = bbr_init,//初始化

.cong_control = bbr_main,//拥塞窗口增减总控

.sndbuf_expand = bbr_sndbuf_expand,//当前版本未实现

.undo_cwnd = bbr_undo_cwnd,//根据bbr理论,不需要这个

.cwnd_event = bbr_cwnd_event,//在开始发包且app未充分利用信道才会处理。

.ssthresh = bbr_ssthresh,//Entering loss recovery, so save cwnd for when we exit or undo recovery.

.min_tso_segs = bbr_min_tso_segs,

.get_info = bbr_get_info,//用于获取bbr结构相关信息

.set_state = bbr_set_state,//设置bbr内部维护的协议栈上的拥塞状态

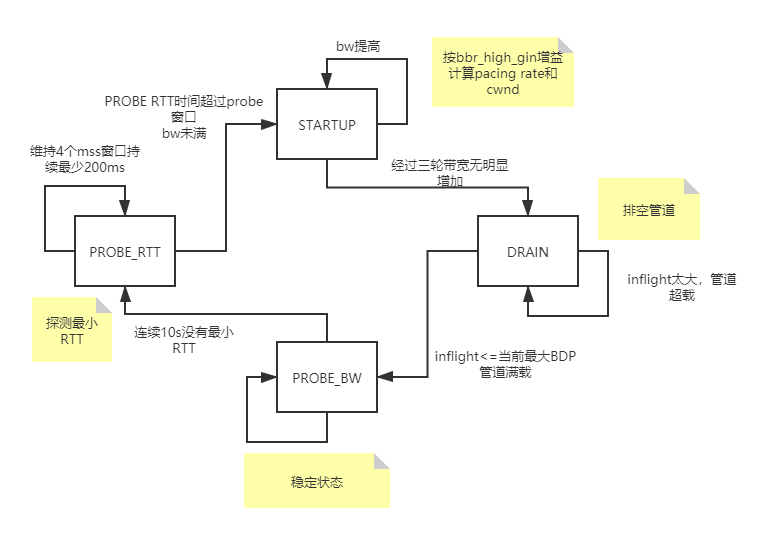

};bbr的四种模式(状态)

1

2

3

4

5

6

7

8

9

10

11

12

13

1478 /* BBR has the following modes for deciding how fast to send: */

79 /*

80 BBR 四种模式:

81 STARTUP:快速开始抢占带宽

82 DRAIN:清空启动期间创建的任何队列

83 PROBE_BW: 周期测试最佳带宽

84 PROBE_RTT:清空发出的包计算minRTT

85 */

86 enum bbr_mode {

87 BBR_STARTUP, /* ramp up sending rate rapidly to fill pipe */

88 BBR_DRAIN, /* drain any queue created during startup */

89 BBR_PROBE_BW, /* discover, share bw: pace around estimated bw */

90 BBR_PROBE_RTT, /* cut inflight to min to probe min_rtt */

91 };bbr的算法过程:

ref:https://switch-router.gitee.io/blog/bbr1/

ref: linux5.13.1源码,bbr rfcbbr两个重要调控参数:

- pacing_rate:BBR.pacing_rate: The current pacing rate for a BBR flow, which controls inter-packet spacing.

BBR 流的当前行进速率,它控制数据包间的间距。 - cwnd: cwnd: The transport sender’s congestion window, which limits the amount of data in flight.

传输发送方的拥塞窗口,它限制了传输中的数据量。和bdp挂钩

从上面知道了cwnd如何影响发送。那pacing_rate如何影响发送速度?

内核在4.x后支持了pacing的功能,不记得是默认关还是,可以调节发包速度,根据sock->sk_pacing_rate的值来;

而bbr在计算pacing_rate后,会通过bbr_set_pacing_rate更新这个值。

而如何调控,其实内核通过qdisc等机制,具体查sk_pacing_rate在哪里被使用就知道了。

- 设计思想:

控制时机提前,不再等到丢包时再进行暴力限制,而是控制稳定的发包速度,尽量榨干带宽,却又不让报文在中间设备的缓存队列上累积。

为了得到稳定的发包速度,BBR 使用 TCP Pacing 进行发包控制,因此 BBR 的实现也需要底层支持 TCP Pacing; 为了榨干带宽,BBR 会周期性地去探测是否链路条件变好了,

如果是,则加大发送速率; 为了不让报文在中间设备的缓存队列上累积,BBR 会周期性地探测链路的最小 RTT,并使用该最小 RTT 计算发包速率。

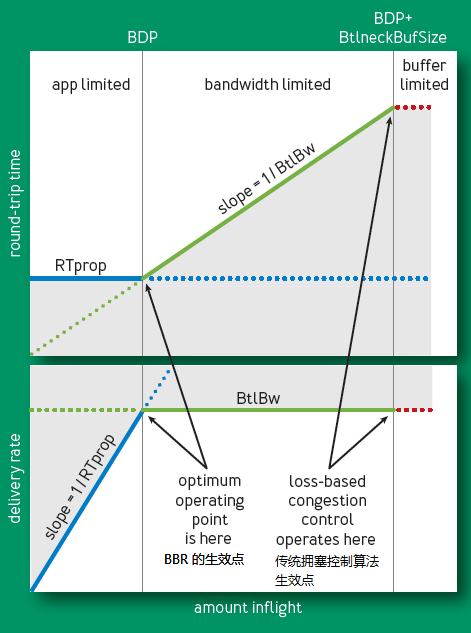

这张图分为上下两部分:上半部分的 Y 轴是 RTT,下半部分的 Y 轴则表示 delivery rate (也就是 estimated bandwidth)。特别注意的是 X 轴不是时间,而是 amount inflight,也就是在途报文的数量。

整个图从左到右可分为 3 个区域:1. app limited, 2. bandwidth limited, 3. buffer limited

app limited

这个区域中,这条流上流量较小,没有充分利用信道,不存在阻塞的情况,delivery rate 线性增加,因此 RTT 保持不变,为链路的固有往返时延(RTprop)。bandwidth limited

这个区域表示 inflight 报文数量超过了 BDP,超过的部分是被中间设备缓存,最终表现出来就是 delivery rate 不变(只跟 bandwidth 有关),而 RTT 由于中间设备缓存 queue 的存在而线性增加。buffer limited

inflight 报文数量继续增加,超过 BDP 的部分最终也超过了中间设备的缓存极限,出现丢包。

BBR 追求的目标是保持 inflight 报文数量就为 BDP,这样既充分利用了 bandwidth,每个报文又能享受到 RTprop。

尽管 bandwith 和 RTT 都是可测量的,但很遗憾的是它们不能被同时探测!因为rtt要测量最小值,需要用比较低的速率去测量,较大的速率可能带来高延迟。

- bbr状态机:来自一个网站的图:

1

2

3

4

5

6

7

8

9

10

11

12

13

14|

V

+---> STARTUP ----+

| | |

| V |

| DRAIN ----+

| | |

| V |

+---> PROBE_BW ----+

| ^ | |

| | | |

| +----+ |

| |

+---- PROBE_RTT <--+

每次收到一个ack的处理:

会走遍所有流程,但是有些流程是某个状态下才会处理,所以也就包含了状态机处理过程。延迟对bbr计算的影响

延迟会影响ack的统计,影响对bw的估算,从而影响了pacing_rate和cwnd, 另外接收方的延迟ack会导致ack聚集

也会导致带宽缺口。丢包对bbr计算的影响

bbr通过检测丢包,也判断是否为中间设备限速的情况,此时要以较低的速率发送。具体见下。每收到一个ack的处理:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21static void bbr_main(struct sock *sk, const struct rate_sample *rs)

{

struct bbr *bbr = inet_csk_ca(sk);

u32 bw;

bbr_update_model(sk, rs);

bw = bbr_bw(sk);//计算bw

bbr_set_pacing_rate(sk, bw, bbr->pacing_gain);//设置速度

bbr_set_cwnd(sk, rs, rs->acked_sacked, bw, bbr->cwnd_gain);//设置拥塞窗口

}

static void bbr_update_model(struct sock *sk, const struct rate_sample *rs)

{

bbr_update_bw(sk, rs);//估计带宽:更新rtt周期,计算带宽,将带宽和minrtt加入新的rtt,bw样本

bbr_update_ack_aggregation(sk, rs);//计算inflight:计算预期的ack,重置ack统计周期,计算超出预期的多余数据,维护extra_acked最大值

bbr_update_cycle_phase(sk, rs);//增益循环PROBE_BW

bbr_check_full_bw_reached(sk, rs);//填充BDP管道

bbr_check_drain(sk, rs);//排空队列

bbr_update_min_rtt(sk, rs);//更新最小rtt

bbr_update_gains(sk);//更新增益

}分析之前看下预备结构:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24/* A rate sample measures the number of (original/retransmitted) data

* packets delivered "delivered" over an interval of time "interval_us".

* The tcp_rate.c code fills in the rate sample, and congestion

* control modules that define a cong_control function to run at the end

* of ACK processing can optionally chose to consult this sample when

* setting cwnd and pacing rate.

* A sample is invalid if "delivered" or "interval_us" is negative.

*/

struct rate_sample {

u64 prior_mstamp; /* starting timestamp for interval */

u32 prior_delivered; /* tp->delivered at "prior_mstamp" */

s32 delivered; /* number of packets delivered over interval */

long interval_us; /* time for tp->delivered to incr "delivered" */

u32 snd_interval_us; /* snd interval for delivered packets */

u32 rcv_interval_us; /* rcv interval for delivered packets */

long rtt_us; /* RTT of last (S)ACKed packet (or -1) */

int losses; /* number of packets marked lost upon ACK */

u32 acked_sacked; /* number of packets newly (S)ACKed upon ACK */

u32 prior_in_flight; /* in flight before this ACK */

bool is_app_limited; /* is sample from packet with bubble in pipe? */

bool is_retrans; /* is sample from retransmission? */

bool is_ack_delayed; /* is this (likely) a delayed ACK? */

};

上面这个rate_sample是在tcp_ack 调用tcp_rate_gen(sk, delivered, lost, is_sack_reneg, sack_state.rate); 计算所得,具体去看源码,这里先跳过。分析:

bbr_update_bw(sk, rs);

这个函数用来更新带宽,从注释:

/* Estimate the bandwidth based on how fast packets are delivered */

我们知道是基于包传递的多快来估计带宽,而带宽,常规来说是每秒发送的bit数,常和速度类别,但其实要考虑延迟,更好的理解是带宽容量。

从代码上看,其实就是依赖外部在采样时间得到的传递的包数/采样时间us得到速率1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53对应rfc 4.5.2.4. Updating the BBR.max_bw Max Filter

static void bbr_update_bw(struct sock *sk, const struct rate_sample *rs)

{

struct tcp_sock *tp = tcp_sk(sk);

struct bbr *bbr = inet_csk_ca(sk);

u64 bw;

bbr->round_start = 0;

if (rs->delivered < 0 || rs->interval_us <= 0)

return; /* Not a valid observation */

/* See if we've reached the next RTT */ //收到ack调用的,所以这里可以判断rtt)

if (!before(rs->prior_delivered, bbr->next_rtt_delivered)) {

bbr->next_rtt_delivered = tp->delivered;

bbr->rtt_cnt++;//更新rtt数量

bbr->round_start = 1;//标记开始一个tx->ack的回合

bbr->packet_conservation = 0;//保护?

}

bbr_lt_bw_sampling(sk, rs);//见下分析

/* Divide delivered by the interval to find a (lower bound) bottleneck

* bandwidth sample. Delivered is in packets and interval_us in uS and

* ratio will be <<1 for most connections. So delivered is first scaled.

*/

bw = div64_long((u64)rs->delivered * BW_UNIT, rs->interval_us);//采样时间内确认的包数/单位时间,BW_UNIT为1<<24,

//前面是算出每us的包数,这里乘以一个系数2^24,后面要除去,注意这里没转换为bps..所以是个比较小的值。

//这个采样时间是在bbr之外定的,一般大于min_rtt,这里暂时不深究。

//这里为啥要乘以一个系数?来看源码解释:

/* Scale factor for rate in pkt/uSec unit to avoid truncation in bandwidth pkt/uSec 单位中速率的比例因子,以避免带宽截断估计。

* estimation. The rate unit ~= (1500 bytes / 1 usec / 2^24) ~= 715 bps.

//即 1500*8 * 1000000(1s=1000000us) / 2^24 ~= 715bps,1500为一个mtu,这里算的是一个速率单位,即通过每us的包数,转换为每s的bit数 再/ 2^24

* This handles bandwidths from 0.06pps (715bps) to 256Mpps (3Tbps) in a u32.//若0.06表示715,则可以存储更多值

* Since the minimum window is >=4 packets, the lower bound isn't

* an issue. The upper bound isn't an issue with existing technologies.//因为最小窗口>=4,所以下限不是问题。

*/

/* If this sample is application-limited, it is likely to have a very

* low delivered count that represents application behavior rather than

* the available network rate. Such a sample could drag down estimated

* bw, causing needless slow-down. Thus, to continue to send at the

* last measured network rate, we filter out app-limited samples unless

* they describe the path bw at least as well as our bw model.

*

* So the goal during app-limited phase is to proceed with the best

* network rate no matter how long. We automatically leave this

* phase when app writes faster than the network can deliver :)

*/

if (!rs->is_app_limited || bw >= bbr_max_bw(sk)) { //和测量的最大带宽相比并更新,这里是采样的最大带宽

/* Incorporate new sample into our max bw filter. */

minmax_running_max(&bbr->bw, bbr_bw_rtts, bbr->rtt_cnt, bw);

}

}处理第三类丢包:被路由器限速:

如果发生监管丢包,BBR会在一段比较长的周期内检测到,它发现在这个周期内持续持有比较高的丢包率(检测到的Lost计数器偏大),且速率保持一致,那么BBR会将发送量限制在实际带宽的平均值。

static void bbr_lt_bw_sampling(struct sock *sk, const struct rate_sample *rs)

具体分析略

ref:https://www.linuxidc.com/Linux/2016-10/136259.htmbbr_update_ack_aggregation(sk, rs) 计算inflight

Estimates the windowed max degree of ack aggregation.估计 ack 聚合的窗口最大程度。

这个有点不太好理解,直接看代码:

对应rfc: 4.5.5. BBR.extra_acked

ref:关于延迟ack,ack 聚集到达:

https://www.shuzhiduo.com/A/WpdKnaqX5V/

简单的说,就是在某种情况下会有大量ack延迟到达,而在延迟之前,cwnd被递减

发送减缓,等到这些突发的ack聚集到达时,需要快速恢复,这里会出现一个

带宽缺口,所以bbr从cwnd递减时机 和快速恢复两点,对带宽进行补偿

不仅如此,延迟到达的ack会影响delivered packet从而影响bw的计算,影响了pacing rate和cwnd,所以需要做补偿

1 | /* Estimates the windowed max degree of ack aggregation. |

3)bbr_update_cycle_phase(sk, rs);//增益循环PROBE_BW

解释:

这个函数做了什么:

当当前处于 ProbeBW状态时且到时间切换到下个增益,会去更新cycle_idx。

1 | bbr->cycle_idx = (bbr->cycle_idx + 1) & (CYCLE_LEN - 1); |

bbr维护了一个增益列表,在过程中会去更新pacing_gain,这个

值会添加增益列表中对应cycle_idx索引下的值。

BBR 定义了一个增益循环(gain cycling)的概念,将增益系数作用到 pacing rate 上,以此控制报文发送速度。

ref:

具体的定义是,一个 cycle 包含 8 个 phase,每个 phase 的持续时间为 1 个 min RTT。8 个 phase 的 gain 分别为:1.25、0.75、1、1、1、1、1、1。当处于 gain 为 1.25 的 phase 时,意味着 pacing rate 是当前计算的合理值的 1.25 倍,此时 BBR 发送速率也会变成正常情况下的 1.25 倍(踩油门)。而当处于 gain 为 0.75 的 phase 时,相应的发送速率是正常情况的 0.75 倍(踩刹车)。而 gain 为 1 时,发送速率就是正常值(巡航).具体的定义是,一个 cycle 包含 8 个 phase,每个 phase 的持续时间为 1 个 min RTT。8 个 phase 的 gain 分别为:1.25、0.75、1、1、1、1、1、1。当处于 gain 为 1.25 的 phase 时,意味着 pacing rate 是当前计算的合理值的 1.25 倍,此时 BBR 发送速率也会变成正常情况下的 1.25 倍(踩油门)。而当处于 gain 为 0.75 的 phase 时,相应的发送速率是正常情况的 0.75 倍(踩刹车)。而 gain 为 1 时,发送速率就是正常值(巡航).

BBR 一脚油门一脚刹车的组合保证了当链路带宽不变时,这条流不会向链路灌入超量的报文。而 6/8 时间段里的 gain 为 1 又使得大部分时候,采用 BBR 控制的 流发送报文的 pacing rate 是稳定的。

– 为什么需要这样做?什么时候加速,稳定,减速?

参考rfc: 4.3.3. ProbeBW

static void bbr_update_gains(struct sock *sk)

增益列表的定义:

1 | /* The pacing_gain values for the PROBE_BW gain cycle, to discover/share bw: */ |

- bbr_check_full_bw_reached 检查是否到最大带宽

协议描述:4.3.1.2. Exiting Startup Based on Bandwidth Plateau1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44/* Estimate when the pipe is full, using the change in delivery rate: BBR

* estimates that STARTUP filled the pipe if the estimated bw hasn't changed by

* at least bbr_full_bw_thresh (25%) after bbr_full_bw_cnt (3) non-app-limited

* rounds. Why 3 rounds: 1: rwin autotuning grows the rwin, 2: we fill the

* higher rwin, 3: we get higher delivery rate samples. Or transient

* cross-traffic or radio noise can go away. CUBIC Hystart shares a similar

* design goal, but uses delay and inter-ACK spacing instead of bandwidth.

*/

使用delivery rate的变化来估计管道何时充满:BBR

如果在 bbr_full_bw_cnt (3次) 非应用程序限制轮次之后估计的 bw 没有改变至少 bbr_full_bw_thresh (25%),

则估计 STARTUP 填充了管道。 为什么要进行 3 轮:

1:rwin 自动调整使 rwin 增长,

2:我们填充更高的 rwin,

3:我们获得更高的delivery rate样本。 或者瞬态交叉流量或无线电噪声可以消失。

3次相当于给恢复的机会,或容错成本

CUBIC Hystart 具有类似的设计目标,但使用延迟和 ACK 间间隔而不是带宽。

static void bbr_check_full_bw_reached(struct sock *sk,

const struct rate_sample *rs)

{

struct bbr *bbr = inet_csk_ca(sk);

u32 bw_thresh;

if (bbr_full_bw_reached(sk) || !bbr->round_start || rs->is_app_limited)//如果已经达到full bw( /* reached full bw in Startup? */)

//或者round 还没开始当前在app发送没充分利用信道带宽的情况下,直接返回

return;

/* To estimate if BBR_STARTUP mode (i.e. high_gain) has filled pipe... */

/* If bw has increased significantly (1.25x), there may be more bw available: */

static const u32 bbr_full_bw_thresh = BBR_UNIT * 5 / 4;

bw_thresh = (u64)bbr->full_bw * bbr_full_bw_thresh >> BBR_SCALE;//注意这里用1.25倍上次的最大值。

//和协议4.3.1.2. 上的算法是符合的。

if (bbr_max_bw(sk) >= bw_thresh) {//max算出来的比上次的大,说明还在增长。

bbr->full_bw = bbr_max_bw(sk);//只有这里更新>0的值。

bbr->full_bw_cnt = 0;

return;

}

++bbr->full_bw_cnt;//否则累计,三次不增长则说明到最大值了。

bbr->full_bw_reached = bbr->full_bw_cnt >= bbr_full_bw_cnt;//达到三次认为是达到最大值。

/* But after 3 rounds w/o significant bw growth, estimate pipe is full: */

//static const u32 bbr_full_bw_cnt = 3;

}

5)bbr_check_drain 状态转移到drain 排空

这里为什么要排空?为了避免在startUp估计的速率太大,和当前的bdp不适配。

在 Drain 中,BBR 旨在通过切换到远低于 1.0 的 pacing_gain 来快速排空 Startup 中创建的任何队列,直到任何估计的队列被排空。

它使用一个 pacing_gain,它是启动期间使用的值的倒数,选择尝试在一轮中耗尽队列

rfc:4.3.2. Drain Drain状态

如何排空:

bbr_update_gains 当前是drain状态时:

1 | case BBR_DRAIN: |

1 | /* If pipe is probably full, drain the queue and then enter steady-state. */ |

这里涉及到了bdp,bdp是带宽时延积,简单理解就是在充满网络管道的大小,或者管道容量,它通过:

时延带宽积 = 时延 × 带宽

计算出。而拥塞控制窗口,其实是在空中传输的包数,那这里需要限制在空中传输的包数不能超过bdp,也就是带宽时延积,在我们能容忍的rtt下。

时延通过rtt算出。

- bbr_update_min_rtt 周期更新最小rtt,是为了计算最大的bdp。

4.3.4. ProbeRTT

1 | /* The goal of PROBE_RTT mode is to have BBR flows cooperatively and |

- bbr_update_gains(sk);

这个函数主要是更新增益,在Drain中,也是依赖这个函数的调用,达到耗尽队列的目的

因为每个状态都可能更新pacing_gain和cwnd_gain,所以这里统一更新。

1 | 4.3.2. Drain Drain状态 |

bw = bbr_bw(sk);

1

2

3

4

5

6

7

8

9/* Return the estimated bandwidth of the path, in pkts/uS << BW_SCALE. */

static u32 bbr_bw(const struct sock *sk)

{

struct bbr *bbr = inet_csk_ca(sk);

return bbr->lt_use_bw ? bbr->lt_bw : bbr_max_bw(sk);

//返回,如果发生路由器限速的情况,则用该情况测试出来的带宽,否则用

//当前测出的最大带宽

}bbr_set_pacing_rate(sk, bw, bbr->pacing_gain);

这一步是更新最后参数的两个步骤之一:更新pacing_rate:1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38/* Pace using current bw estimate and a gain factor. */

static void bbr_set_pacing_rate(struct sock *sk, u32 bw, int gain)

{

struct tcp_sock *tp = tcp_sk(sk);

struct bbr *bbr = inet_csk_ca(sk);

unsigned long rate = bbr_bw_to_pacing_rate(sk, bw, gain);//通过bw算出pacing rate

if (unlikely(!bbr->has_seen_rtt && tp->srtt_us))//如果还没采样到rtt,并且之前有测过得到平滑的

rtt了,则尝试通过平滑的rtt来算pacing_rate,并更新到sk->sk_pacing_rate中

bbr_init_pacing_rate_from_rtt(sk);

if (bbr_full_bw_reached(sk) || rate > sk->sk_pacing_rate)//更新pacing rate

sk->sk_pacing_rate = rate;

}

/* Convert a BBR bw and gain factor to a pacing rate in bytes per second. */

static unsigned long bbr_bw_to_pacing_rate(struct sock *sk, u32 bw, int gain)

{

u64 rate = bw;

rate = bbr_rate_bytes_per_sec(sk, rate, gain);

rate = min_t(u64, rate, sk->sk_max_pacing_rate);

return rate;

}

/* Return rate in bytes per second, optionally with a gain.

* The order here is chosen carefully to avoid overflow of u64. This should

* work for input rates of up to 2.9Tbit/sec and gain of 2.89x.

*/

static u64 bbr_rate_bytes_per_sec(struct sock *sk, u64 rate, int gain)

{

unsigned int mss = tcp_sk(sk)->mss_cache;

rate *= mss;//mss大小,byte

rate *= gain;//这里是乘以pacing_gain,也是循环增益那里选择的倍数

rate >>= BBR_SCALE;//右移8位,即除以2^8 256

rate *= USEC_PER_SEC / 100 * (100 - bbr_pacing_margin_percent);(1000000L/100*(100-1))

return rate >> BW_SCALE;//右移24位

}更新 cwnd

bbr_set_cwnd(sk, rs, rs->acked_sacked, bw, bbr->cwnd_gain);1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43/* Slow-start up toward target cwnd (if bw estimate is growing, or packet loss

* has drawn us down below target), or snap down to target if we're above it.

*/

static void bbr_set_cwnd(struct sock *sk, const struct rate_sample *rs,

u32 acked, u32 bw, int gain)

{

struct tcp_sock *tp = tcp_sk(sk);

struct bbr *bbr = inet_csk_ca(sk);

u32 cwnd = tp->snd_cwnd, target_cwnd = 0;

if (!acked)//若当前的ack没有acked任何包,则跳过

goto done; /* no packet fully ACKed; just apply caps */

//在外部拥塞控制状态为recovery或丢包等外部参数给进来判断,控制拥塞窗口大小

if (bbr_set_cwnd_to_recover_or_restore(sk, rs, acked, &cwnd))

goto done;

//目标拥塞窗口大小为bdp

target_cwnd = bbr_bdp(sk, bw, gain);

/* Increment the cwnd to account for excess ACKed data that seems

* due to aggregation (of data and/or ACKs) visible in the ACK stream.

*/

//加上补偿

target_cwnd += bbr_ack_aggregation_cwnd(sk);

target_cwnd = bbr_quantization_budget(sk, target_cwnd);

/* If we're below target cwnd, slow start cwnd toward target cwnd. *///若小于target,则slow start

if (bbr_full_bw_reached(sk)) /* only cut cwnd if we filled the pipe */

cwnd = min(cwnd + acked, target_cwnd);

//若当前拥塞窗口小于目标拥塞窗口或已成功传递的数量小于10,则用当前拥塞窗口+acked

else if (cwnd < target_cwnd || tp->delivered < TCP_INIT_CWND)

cwnd = cwnd + acked;

cwnd = max(cwnd, bbr_cwnd_min_target);//后者为4,至少为4

/* TCP initial congestion window as per rfc6928 */

//#define TCP_INIT_CWND 10

done:

tp->snd_cwnd = min(cwnd, tp->snd_cwnd_clamp); /* apply global cap */

if (bbr->mode == BBR_PROBE_RTT) /* drain queue, refresh min_rtt */

tp->snd_cwnd = min(tp->snd_cwnd, bbr_cwnd_min_target);//此时在探测最小rtt,应该减小

//窗口值,<=4,因为要探测最小rtt,发送不能过快

}

状态下的图片和速率参考:

https://switch-router.gitee.io/blog/bbr1/

TODO: startup的速率曲线为什么是线性函数?跟通过cwnd的增长是:cwnd = cwnd + acked; 有关。

关于sack,dack,rack

sack,即选择性确认,可以通过tcp option携带没有收到的seq信息,从而发送方可以选择性重传部分段而不用全部都重传;

dack: D-SACK可以在SACK选项中描述它重复收到的报文段。但是需要注意的是D-SACK只用于报告接收端收到的最后一个报文与已经接收了的报文的重复部分

但sack也有漏洞和风险:

https://www.q578.com/s-5-202844-0/fack:fack是sack的更进一步优化,当使用fack时,sack的数据包个数不再需要超过乱序阀值,fack只关心收到的最大的sack数据包相比snd_una的间距是否超过乱序阀值,

当超过时就会开始标记loss,fack标记loss时,从write队列最左侧开始标记,直至待标记的skb距离最大sack的skb的间距为reordering-1为止;rack:基于时间的RACK:

rack相比fack又更进一步,rack是根据skb的发送时间来判断消息包是否丢失,tcp每发送一个skb时,都会为其分配一个时间戳信息,当sack时,rack机制判断那些在被sack的数据包之前发送的,并且相比被sack的数据包的时间间距到达一定间隔时,就判断该数据包为丢失,进而进入快恢复状态,然后重传被标记丢失的数据包。

关于更多ack参考:

https://www.freesion.com/article/6142762829/

tcp_ack函数分析:

1 | /* This routine deals with incoming acks, but not outgoing ones. */ |